
What is Voice User Interface (VUI)?

Volodymyr Khmil, CEO

8 min read

A voice user interface (VUI) lets users interact with a system through voice commands. Think of it as a GUI that you speak to rather than tap: that is the most concise description of today’s topic. So, it’s time to understand what voice commands are and to be among the first to adopt voice control in your applications.


What is voice user interface, and why is it so different from the GUI?

Let’s jump right into what voice user interface and commands mean.

Until recently, nearly every system, whether an application or a satellite, was controlled through a graphical user interface. If you needed to make a purchase in an app, you would choose the number of goods, the color of the item, and the delivery address directly in the app using buttons, sliders, or text boxes.

But technology keeps getting simpler to use, and that is by design: the goal is to make our lives easier. According to a report, 1 in 4 US adults owns a smart speaker, which is roughly 60 million people across the country. On top of that, one-third of the US population uses the voice search features on their devices.

People seek hands-free and efficient interactions that are more “human” in their nature when accessing user interfaces. “Speech is the fundamental means of human communication,” says Clifford Nass, Stanford scientist and co-author of Wired for Speech, “…all cultures persuade, inform, and build relationships primarily through speech.”

So, it’d be worth integrating speech into mobile application development. The golden rule is: the easier it is for the users to take action, the more they will use your app.

But why do many people still choose to perform actions with their fingers instead of via voice? The truth is that a computer doesn’t think like a person. It can perform complex mathematical calculations in a split second, which would take a whole team of specialists hours. Yet, it’s difficult for a computer to grasp the tone of the conversation and extract from it the wishes of the speaker.

Let me give you a quick example:

– Would you like coffee with milk?

– With coconut milk and honey.

Any person follows this dialogue effortlessly, yet the short reply packs in three things:

- The affirmative answer.

- The choice of milk, namely coconut milk.

- An additional request to add honey or even replace the sugar with honey.

However, it is very challenging for a computer to pick up all these nuances from an ordinary conversation.

Recently, with advances in technology and computing power, machines have come closer to understanding what humans want. We’ve developed devices that translate and transcribe our speech in real time. Sometimes the results are clumsy and need correcting, but they are good enough for communicating with most apps.

That’s why voice assistants and VUI have become popular in the last few years: voice commands have reached a whole new level of quality. You no longer need to open the app, choose the food and the delivery option, and then place the order. All you need now is to say explicitly, “order pasta carbonara with double cheese to 29 Main Street.” And if this information is not enough to place an order, the system will fill in the missing data. It feels like you’re ordering pasta over the phone.

Why is voice user interface more important than you think?

Although most platforms already support voice UI and can interpret voice commands, only a small number of apps actually use this functionality.

Home assistants, the devices for your home or office that respond to your voice commands, are becoming more popular. Most tech heavyweights have already released their own versions. You have probably heard of Apple’s HomePod and Amazon’s Echo with Alexa, among others. Competition in the market is steadily growing, and the blue oceans are turning red (Editor’s note: Blue Ocean Strategy is a book written by W. Chan Kim and Renée Mauborgne, professors at INSEAD, and the name of the marketing theory detailed in the book).

Let’s return to the food delivery example. When you are at home, you are more likely to order dinner through a home assistant, and you will naturally pick an application that is compatible with the device. If the app is convenient to use at home, it will most likely be convenient outside the house too, because you are unlikely to keep several apps that do the same thing. Word of mouth matters as well: it encourages your friends to use the same application. Finally, COVID-19 has meant that more people are working from home, and staying home has become a significant catalyst for the use of home assistants. I’ve personally bought some and see several of my friends following my example.

Besides the convenience of home assistants themselves, voice-enabled apps tend to be simpler to use.

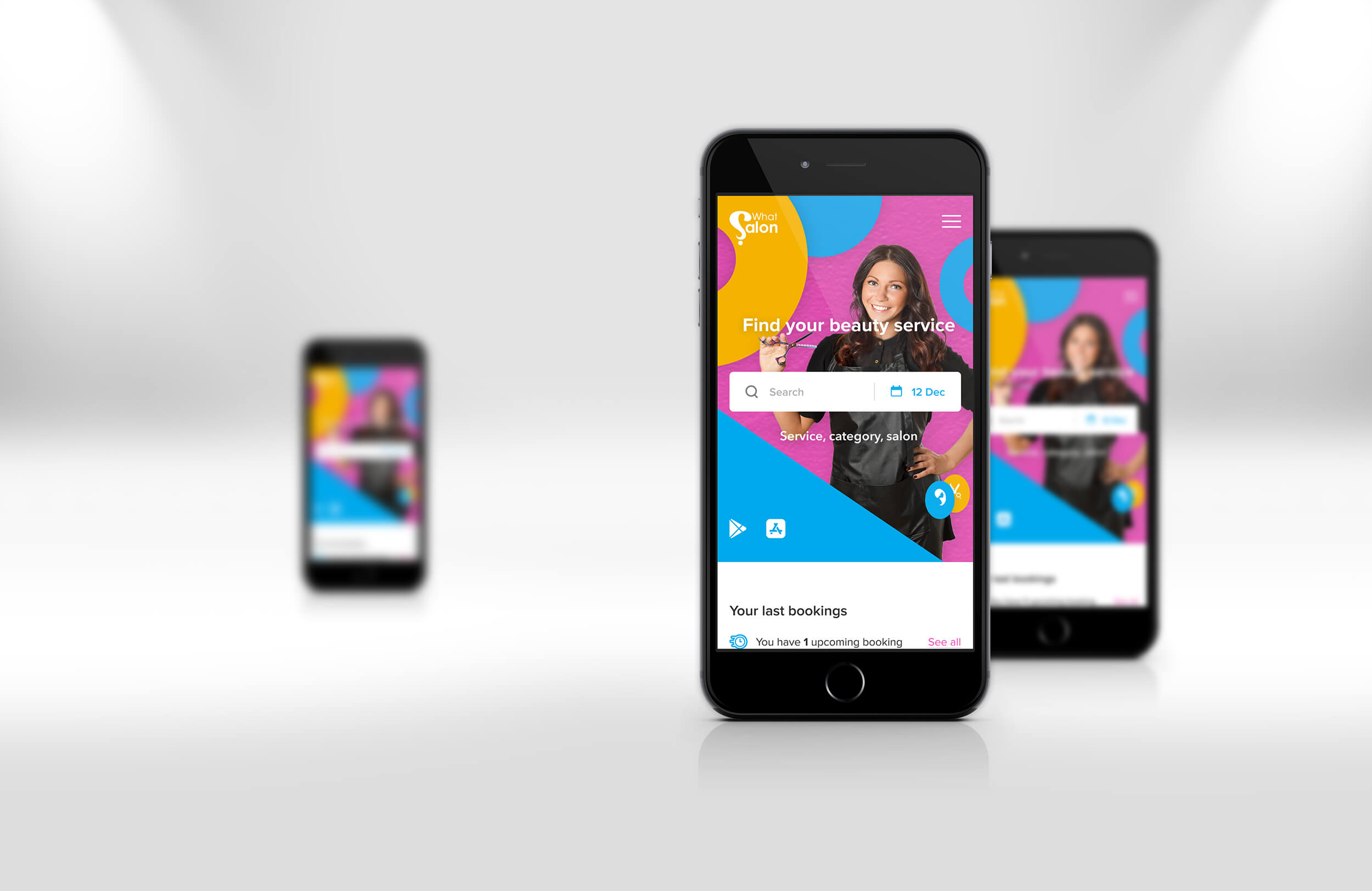

Take the booking system for a beauty salon with a voice assistant that we’ve developed. Salon clients book visits and services occasionally, usually in advance. The actions with the bookings are quite routine, but the users still have to do a lot of work to place an order. They unlock the phone, open the app, find the proper order, customize it, and only then confirm. What is the best way to simplify the process? Voice commands, of course. Do you want to postpone your booking? Just say the most suitable date. Need to cancel your appointment or place the same order as last time? Just tell the app to do it.

These little things determine how easy your application is to use. Again, the simpler the app is, the more popular it will become.

That is why I consider the voice user interface to be underestimated. Often a whole army of designers introduces the latest options and features to simplify an app through its graphical interface but fails to add voice UI.

What are the existing systems for voice commands?

Developing your own voice assistant is a long and challenging process. What’s more, it is increasingly difficult to make it competitive with the existing ones. That is why if you decide to add VUI to your product, I suggest you pay attention to ready-made solutions.

Some of the most popular voice assistants are Siri by Apple, Alexa by Amazon, and Google Assistant. They offer similar functionality and work comparably well for most tasks. So, let’s have a closer look at VUI design.


What should you look for when building a voice UI?

By now, you’re probably wondering how VUI is different from GUI and why you need to change the design completely to add voice control. The answer might surprise you. You need to change both the design and the whole approach to mobile application development.

The difference between VUI and GUI lies in the perception of information. We grasp it differently depending on the way we receive it, visually (eyes) or aurally (ears).

Here is how this works in practice: if you ask users ten questions aloud, one after another, they will most likely get confused and fail to answer them. But if they receive the same questions as a printed or written questionnaire, the task becomes quite simple.

Here are seven rules to follow when planning voice interface design:

1. Tell the users where they are at each step of the user journey. In the graphical interface, users see a “Next” button and text with instructions. But not so with voice UI. Users can easily get lost in the flow or mistakenly go off on a tangent. So, they need to be informed of what actions the system expects from them.

Take a look at this dialogue:

– What is the weather like?

– It’s sunny today.

The answer makes it clear that the forecast is for today. In the graphical interface, the users would simply open the section called “day” instead of “week.” The spoken answer does the same job: it tells them which section they are in by default.

2. Present the information in the way you want the user to provide it to you. You may not notice, but we often shorten phrases and use slang when talking. Still, the meaning remains the same as in the full expression.

Let me give you an example: the phrases “Is anyone there?” and “Is anyone home?” carry the same meaning, but people usually reach for the shorter, more common one. Users will take similar shortcuts with a voice assistant: they will say “book a haircut” without specifying the time or the exact service, and the assistant will receive an incomplete request.

To reduce such issues, teach your users to use full statements when they make a request. Program your voice assistant to answer in full sentences. Then, the users will automatically switch to the same conversation style and give all the information in the way the voice assistant requires it.
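One way to picture the incomplete “book a haircut” request is slot filling: the assistant tracks which details a booking needs and asks, in a full sentence, for whichever one is still missing. The Python sketch below is only an illustration under stated assumptions. The slot names (`service`, `date`, `time`), the prompt wording, and the helpers `missing_slots` and `next_prompt` are hypothetical and do not come from any assistant SDK.

```python
from typing import Optional

# Hypothetical details a salon booking needs, in the order we ask for them.
REQUIRED_SLOTS = ("service", "date", "time")

# Full-sentence prompts that model the phrasing we hope users will mirror.
PROMPTS = {
    "service": "Which service would you like to book?",
    "date": "On which date would you like to come in?",
    "time": "At what time would you like your appointment?",
}


def missing_slots(request: dict) -> list:
    """Return the required slots the user has not provided yet."""
    return [slot for slot in REQUIRED_SLOTS if not request.get(slot)]


def next_prompt(request: dict) -> Optional[str]:
    """Return the next question to ask, or None when the request is complete."""
    missing = missing_slots(request)
    return PROMPTS[missing[0]] if missing else None


# "Book a haircut" names the service but gives no date or time.
partial = {"service": "haircut"}
print(missing_slots(partial))  # ['date', 'time']
print(next_prompt(partial))    # On which date would you like to come in?
```

Asking for one missing detail at a time keeps each turn short, which also fits the advice on limiting how much the user has to hold in memory.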

3. Limit the amount of information the user receives. In the graphical interface, the users clearly see what they need to do, which options they have, and what additional instructions are available. But with voice UI, users can only perceive and memorize a limited amount of information.

So, if you give the users ten options to choose from, they’ll forget the first one when you talk about the fifth. Amazon suggests giving no more than three options.

But what if there are more options? It’s a no-brainer: cluster the options together into similar categories and let the users first select a group, and only then can they choose the desired option from this group. And be careful because a confusing cluster will make the users run back and forth to find what they need. There is also another option: give the users a choice of three that most likely suit them and the option to choose from the rest.

In short, be careful how you present information in voice UI because users quickly start to get nervous when they get asked a million questions for a simple action: “If you agree, press 1, if you want to listen, press hash.”
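The “three options plus the rest” idea can be sketched in a few lines of Python. This is a rough illustration, not a reference implementation: the function name `spoken_menu`, the wording of the sentence, and the “more” keyword are assumptions made for the example, and the options are assumed to be already sorted by how likely they are to suit the user.

```python
MAX_SPOKEN_OPTIONS = 3  # the limit this article attributes to Amazon's guidance


def spoken_menu(options: list, limit: int = MAX_SPOKEN_OPTIONS) -> str:
    """Build one sentence offering at most `limit` options, plus a way to hear the rest."""
    if not options:
        return "There is nothing to choose from right now."
    head, rest = options[:limit], options[limit:]
    if len(head) == 1:
        listed = head[0]
    else:
        listed = ", ".join(head[:-1]) + " or " + head[-1]
    sentence = f"You can choose {listed}."
    if rest:
        # Only mention the extra step when there really are more options.
        sentence += " Say 'more' to hear other options."
    return sentence


services = ["a haircut", "a manicure", "a massage", "a facial", "a pedicure"]
print(spoken_menu(services))
# You can choose a haircut, a manicure or a massage. Say 'more' to hear other options.
```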

4. Communicate with the user about what is happening. In the graphical interface, the users see how the application responds to their actions. A voice interface that stays silent is like talking on the phone and hearing nothing in response. The users must therefore know when the application is listening to them or processing the data. For example, if you have booked a haircut, the voice assistant must repeat the selected details.

5. Handle mistakes elegantly. Unfortunately, voice recognition still makes errors, and speech-to-text transcription may not be perfect. Be prepared for this and build robust error handling.

Here’s a list of the most common errors with the voice user interface:

- The speech is transcribed incorrectly.

- The user sentence is incomplete.

- The information provided is completely incorrect.

- The user request cannot be made.
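The four error types above could map to four different spoken recoveries, with a fallback to the graphical interface when the conversation keeps failing. The Python sketch below shows one possible shape for this. The `VoiceError` names, the reply texts, and the two-attempt limit are all assumptions chosen for illustration.

```python
from enum import Enum, auto


class VoiceError(Enum):
    MISHEARD = auto()     # the speech was transcribed incorrectly
    INCOMPLETE = auto()   # the user's sentence is missing details
    INVALID = auto()      # the information provided is wrong
    UNSUPPORTED = auto()  # the request cannot be carried out


# Hypothetical spoken replies; each one tells the user what to do next.
RECOVERY = {
    VoiceError.MISHEARD: "Sorry, I did not catch that. Could you repeat it?",
    VoiceError.INCOMPLETE: "I need a little more detail. Which day and time suit you?",
    VoiceError.INVALID: "That does not look right. Could you check the details and try again?",
    VoiceError.UNSUPPORTED: "I cannot do that by voice yet. You can do it in the app.",
}

FALLBACK = "I am still having trouble. Let us continue in the app instead."


def recover(error: VoiceError, failed_attempts: int, max_attempts: int = 2) -> str:
    """Pick a reply for the error, falling back to the GUI after repeated failures."""
    if failed_attempts >= max_attempts:
        return FALLBACK
    return RECOVERY[error]


print(recover(VoiceError.MISHEARD, failed_attempts=0))
print(recover(VoiceError.MISHEARD, failed_attempts=2))
```

Capping the number of retries is a design choice: repeating the same question indefinitely tends to frustrate users more than handing them over to the screen.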

6. Remember to include an additional level of security, especially when working with sensitive data such as payments. To avoid problems, users must authorize their actions. They can do so in several ways: with a face or fingerprint scan, by opening the application, or by entering a code.
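As a minimal sketch of this rule in Python, the assistant can keep a list of sensitive intents and refuse to run them until the user has passed a separate authorization step. The intent names and the `respond` function are hypothetical, and how `is_authorized` gets set (face scan, fingerprint, or code) is left to the platform.

```python
# Hypothetical intent names; a real app would define its own list.
SENSITIVE_INTENTS = {"pay_for_order", "change_delivery_address"}


def respond(intent: str, is_authorized: bool) -> str:
    """Run ordinary intents right away; ask for authorization before sensitive ones."""
    if intent in SENSITIVE_INTENTS and not is_authorized:
        return "Please confirm with your fingerprint, face, or code to continue."
    return f"Okay, running {intent}."


print(respond("check_booking", is_authorized=False))  # runs without extra checks
print(respond("pay_for_order", is_authorized=False))  # asks for authorization first
print(respond("pay_for_order", is_authorized=True))   # runs after authorization
```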

7. Don’t get carried away. Not all functions have to be controlled by voice. If you get too excited and add VUI everywhere, you will end up with the same result as applications that can simultaneously record notes and send rockets into space: useless and ridiculous. With voice control, it is even worse, because the users won’t see what is happening and will get confused.

So, do some detailed research and highlight the most frequent user queries. Then, make a map of the voice UI. Finally, ask yourself and answer honestly whether VUI will simplify the users’ actions or make them more complicated.

Conclusions

In a nutshell, the voice user interface has exploded in popularity in recent years. Siri and Amazon’s Alexa have made VUI a global trend. Voice UIs rely on speech recognition technologies to understand users and give them what they want. Hopefully, after reading this article, you will consider the benefits of finding your voice with VUI.

Although voice UI is a powerful technology, it is still not perfect, and refinement is ongoing. Yet I am sure that VUI will soon become a must-have feature of every application.

So, if you want to be on the crest of an innovation wave and implement VUI right now, contact us for a project estimate or consultation.