Supercharge Your AI with RAG: A Startup Founder’s Guide to Real-Time, Data-Driven Intelligence

Vasyl Khmil, CTO

Startups are always looking for smarter ways to integrate AI into their offerings. One of the most transformative AI innovations is retrieval augmented generation (RAG). But what exactly is RAG, and can it empower your business? This article explains RAG in simple terms, shows why it matters, and walks through a real-world case study: NERDZ LAB’s own approach to creating more accurate project estimates.

Introduction: Bridging the Information Gap with RAG

Large language models (LLMs) like GPT-4 have revolutionized AI with their ability to generate human-like language. However, even the most advanced LLMs have limitations. A major one is that their training data is a static snapshot: the model may hold outdated information, or none at all about a specific company or industry.

Imagine asking your AI virtual assistant about your company’s internal sales figures or its latest HR policy. Chances are, the model won’t know anything about that data.

This is where RAG steps in. RAG, or retrieval augmented generation, lets you “supplement” the static knowledge of an LLM with fresh, relevant data. In essence, it’s like giving your AI a cheat sheet with the latest and most specific information that your business needs.

What is RAG in AI?

At its core, RAG involves two components that enhance an AI model’s responses by adding new information the model can use:

- Retriever: The retriever searches and collects relevant information from an external data source. Whether it’s your company’s internal documents, user-generated content, or the latest news, the retriever finds the data most pertinent to your query.

- Generator: Your generative large language model (LLM) uses the data the retriever collected, along with its pre-trained knowledge, to produce a better-informed and more accurate response.

By combining these two functions, RAG ensures that AI models don’t rely solely on a pre-trained dataset. Instead, they can also tap into a reservoir of more current information whenever needed. This makes RAG a powerful asset in AI development.
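To make the two roles concrete, here is a minimal sketch in Python. It is an illustration, not a production design. The retriever ranks documents by simple word overlap as a stand-in for the embedding search a real system would use. The generator accepts any callable in place of an LLM. `echo_llm` is a hypothetical placeholder that returns its prompt, so you can see exactly what a model would receive.

```python
import re
from typing import Callable


def tokenize(text: str) -> set[str]:
    """Lowercase the text and keep only alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Retriever: return the k documents that share the most words with the query.

    Word overlap stands in for the embedding search a real RAG system would use.
    """
    query_words = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(query_words & tokenize(d)), reverse=True)
    return ranked[:k]


def generate(query: str, context: list[str], llm: Callable[[str], str]) -> str:
    """Generator: hand the retrieved context plus the query to an LLM callable."""
    prompt = "Context:\n" + "\n".join(context) + f"\n\nQuestion: {query}"
    return llm(prompt)


def echo_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model call. It returns the prompt unchanged
    # so you can inspect exactly what the model would receive.
    return prompt


docs = [
    "Our refund policy allows returns within 30 days.",
    "Offices close on public holidays.",
    "Q3 sales grew in the EU region.",
]
question = "What is the refund policy?"
context = retrieve(question, docs, k=1)
print(generate(question, context, echo_llm))
```

Swapping `echo_llm` for a real model client and `retrieve` for a vector-database query changes the internals but keeps the same two-step shape.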

Why RAG Matters for Startup Founders

For startup founders, every business-related decision is critical. You need insights that are accurate and tailored to your unique business context. Here’s why RAG is a game changer for AI development.

1. Access to real-time, relevant data

LLMs are excellent at providing general answers but falter when asked about niche or very recent topics, especially topics unique to your company. RAG lets you integrate specific, up-to-date information (such as your latest sales figures, HR policies, or customer feedback) into your AI’s responses. As long as that data is kept current in your retrieval index, answers draw on the most recent information available.

2. More cost-efficient than retraining

Training an LLM from scratch, or even fine-tuning one on your proprietary data, can be prohibitively expensive and time-consuming. RAG avoids retraining altogether. Instead of modifying the model’s core knowledge, you supply tailored supplemental data at query time, which makes improving and updating your AI faster and more economical.

3. Overcoming the context window limitation

LLMs have a limit on how much information they can process at one time. This means that even if you had all your data in one place, the model might not be able to handle it all at once. RAG sidesteps this issue by pre-selecting and sending only the most relevant information from your dataset to the model. Think of it as selecting just the golden nuggets needed for each query.
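Here is a minimal sketch of that pre-selection step. It assumes the retriever has already attached a relevance score to each chunk. It also approximates token counts by whitespace-separated words, whereas real systems count tokens with the model’s own tokenizer. The function greedily keeps the highest-scoring chunks that fit within a fixed budget.

```python
def pack_context(scored_chunks: list[tuple[float, str]], max_tokens: int) -> list[str]:
    """Greedily keep the most relevant chunks that fit within max_tokens.

    Token counts are approximated by word counts, an assumption for illustration.
    """
    selected: list[str] = []
    used = 0
    # Visit chunks from most to least relevant.
    for _, chunk in sorted(scored_chunks, key=lambda pair: pair[0], reverse=True):
        cost = len(chunk.split())
        if used + cost > max_tokens:
            continue  # skip chunks that would overflow the budget
        selected.append(chunk)
        used += cost
    return selected


chunks = [
    (0.91, "Project Alpha took 420 hours including QA."),
    (0.35, "The team lunch is on Fridays."),
    (0.78, "Project Beta used the same payment integration."),
]
print(pack_context(chunks, max_tokens=15))
```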

4. Versatile use cases across industries

Whether you’re building a chatbot to answer customer queries, a tool to provide personalized financial advice, or an internal assistant that keeps track of HR policies, you can adapt RAG to suit your needs. Its versatility makes it a powerful asset for any startup looking to integrate AI into its operations without constant retraining.

The Technical Workflow of RAG

Let’s get a little more technical and understand the step-by-step process behind RAG and its role in AI development:

Step 1: Query input

- User query: The process begins when you submit a query. This query, or problem for the AI model to solve, is the starting point for the RAG process.

Step 2: Retrieving relevant data

- Embeddings: An embedding model translates your custom data (product descriptions, internal reports, or historical project estimates) into numerical representations called vector embeddings, which capture the meaning of the text.

- Storage: The vector embeddings are stored in a specialized database called a vector database.

- Similarity search: A search retrieves documents from the vector database that are most similar to the query, which was also converted into an embedding.
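The three sub-steps of retrieval (embedding, storage, and similarity search) can be sketched end to end with the Python standard library alone. The `embed` function is a deliberately crude, hypothetical stand-in that hashes words into buckets. A real system would call a trained embedding model instead. An in-memory list plays the role of the vector database, and cosine similarity, one common choice, measures closeness.

```python
import math
import re
import zlib

DIM = 256  # embedding size, chosen arbitrarily for this toy example


def embed(text: str) -> list[float]:
    """Toy embedding: hash each word into one of DIM buckets, then L2-normalize.

    A hypothetical stand-in for a real embedding model; it captures word overlap, not meaning.
    """
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        vec[zlib.crc32(word.encode()) % DIM] += 1.0  # crc32 is deterministic across runs
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def cosine(a: list[float], b: list[float]) -> float:
    # Both vectors are unit length, so their dot product equals cosine similarity.
    return sum(x * y for x, y in zip(a, b))


class InMemoryVectorStore:
    """Minimal stand-in for a vector database that stores (vector, text) pairs."""

    def __init__(self) -> None:
        self.items: list[tuple[list[float], str]] = []

    def add(self, text: str) -> None:
        self.items.append((embed(text), text))

    def search(self, query: str, k: int = 3) -> list[tuple[float, str]]:
        q = embed(query)  # the query is embedded the same way as the documents
        scored = [(cosine(q, vec), text) for vec, text in self.items]
        return sorted(scored, reverse=True)[:k]


store = InMemoryVectorStore()
for doc in [
    "Mobile banking app with biometric login",
    "Restaurant website with online booking",
    "Fitness tracking app with wearable sync",
]:
    store.add(doc)

for score, text in store.search("banking app with fingerprint login", k=2):
    print(f"{score:.2f}  {text}")
```

A production vector database performs the same search, but it typically uses approximate nearest-neighbor indexes so that results still come back quickly across millions of vectors.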

Step 3: Fusion and augmentation

- Combining information: The RAG process combines the text of the retrieved documents with the original query. The resulting augmented prompt, composed of both the query and the context-specific data from your custom database, becomes the LLM’s input.

- Passing to the LLM: The enriched input is fed into the advanced generative AI model. The model, such as GPT-4, now has access to both its pre-trained knowledge and your specific, custom data.
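In most implementations, the retrieved passages and the user’s query are combined at the text level, into a single prompt. Here is a minimal sketch of that assembly. The instruction wording and the numbered-source layout are illustrative assumptions, not a required format.

```python
def build_prompt(query: str, passages: list[str]) -> str:
    """Combine retrieved passages and the user's query into one LLM prompt.

    The instructions and layout below are an illustrative template, not a standard.
    """
    # Number each passage so the model (and the user) can refer back to a source.
    sources = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the sources below. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {query}\nAnswer:"
    )


prompt = build_prompt(
    "How many vacation days do new hires get?",
    ["New hires receive 20 vacation days per year.", "Unused days roll over once."],
)
print(prompt)
```

Telling the model to rely only on the supplied sources is a common way to reduce answers that ignore the retrieved data.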

Step 4: Generative response

- Final output: The LLM composes and delivers a coherent response to the user.

Step 5: Performance optimization

- Caching and tuning: You can cache responses to frequent queries to further improve performance. You can also adjust the retriever’s similarity threshold so that the AI model receives only the most relevant information from your data.
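The threshold adjustment in this step can be as simple as a cutoff on the similarity score. The sketch below assumes the retriever returns `(score, text)` pairs with cosine similarities. The 0.75 default is an arbitrary placeholder that you would tune on your own data.

```python
def filter_by_threshold(
    results: list[tuple[float, str]], min_score: float = 0.75, max_results: int = 5
) -> list[str]:
    """Keep only sufficiently similar results, best first, capped at max_results."""
    kept = [(score, text) for score, text in results if score >= min_score]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in kept[:max_results]]


results = [(0.82, "HR policy 2024"), (0.40, "Cafeteria menu"), (0.91, "HR policy FAQ")]
print(filter_by_threshold(results))
```

Raising the threshold trades recall for precision. Fewer, more relevant passages reach the model, but an unusual query may end up with no context at all.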

NERDZ LAB Case Study: RAG in Action

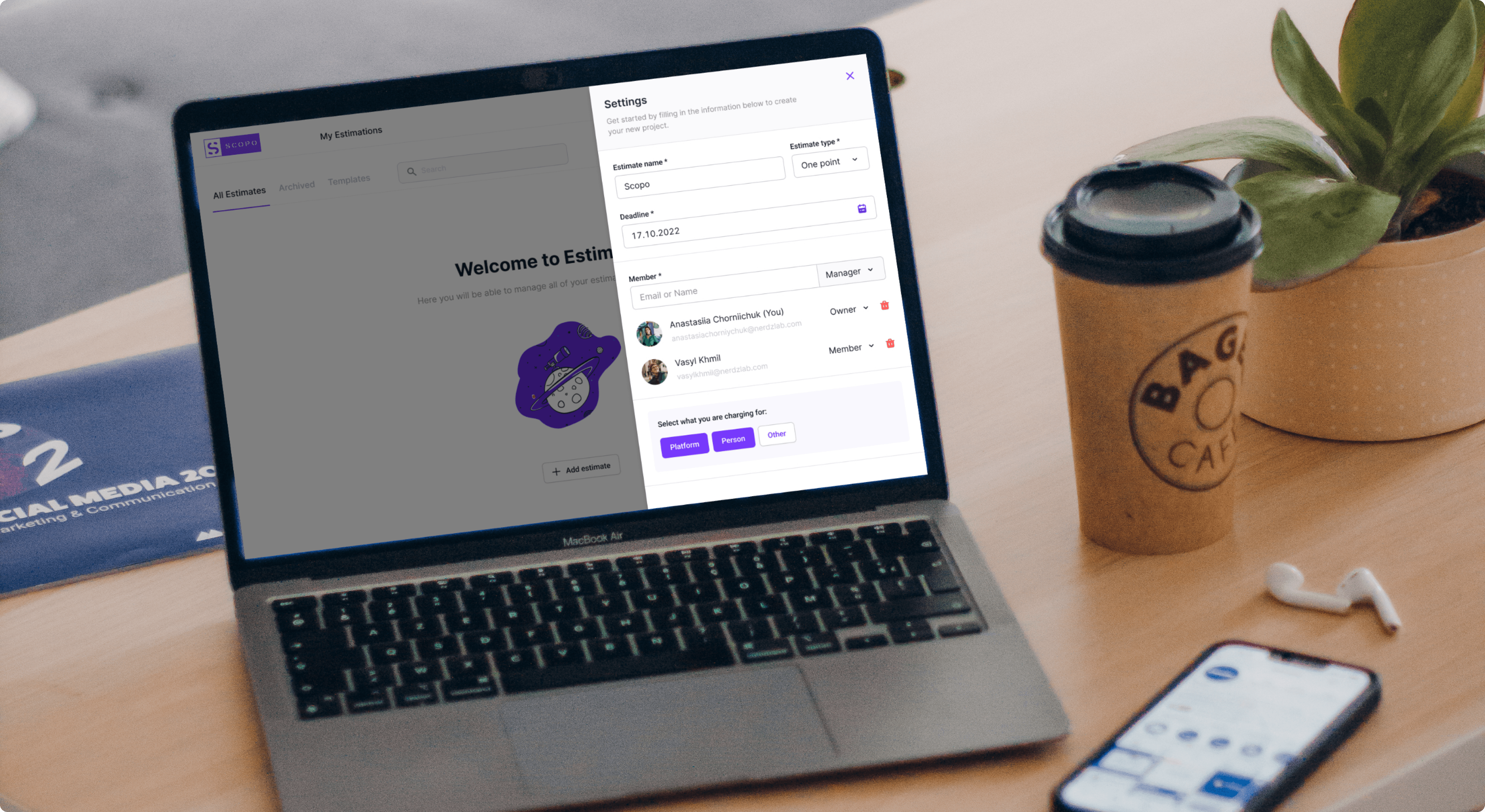

At NERDZ LAB, we’ve successfully integrated the RAG approach into an intelligent tool that helps companies generate accurate project estimates. Here’s how we did it.

The challenge

Many software design and development businesses struggle to create reliable project estimates based on their historical data. Traditionally, they manually sift through past project details, a time-consuming and error-prone process. We wanted to develop a solution that would automatically leverage historical and real-time data to provide accurate estimates.

Our RAG-based solution

- Data preparation: We began by collecting detailed historical project descriptions and their estimates, then split them into manageable chunks.

- Creating embeddings: We then used state-of-the-art natural language processing (NLP) models to translate the meaning of each data chunk into a numerical vector embedding.

- Storing in a vector database: Storing the embeddings in our vector database created an accessible repository of past project data.

- Retrieval for new projects: When a user uploads a new project description, our system converts it into an embedding, queries our vector database, and retrieves projects with similar descriptions and their historical estimates.

- Augmented query for LLM: The system combines the similar historical projects it retrieved with the new project description and sends the enriched input to the LLM. With this customized information, the LLM generates more precise estimates and timeline predictions for new projects.
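The retrieval and augmentation flow above can be sketched in a few lines. This is not NERDZ LAB’s code. The `PastProject` record, the word-overlap (Jaccard) similarity standing in for embedding search, the prompt wording, and the sample hours are all hypothetical. The sketch illustrates one idea: each stored item carries its historical estimate as metadata, so the retrieved matches bring their numbers into the prompt.

```python
import re
from dataclasses import dataclass


@dataclass
class PastProject:
    # Hypothetical record: a project description plus its historical estimate.
    description: str
    estimate_hours: int


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    # Word-overlap similarity, standing in for cosine similarity of embeddings.
    wa, wb = words(a), words(b)
    union = wa | wb
    return len(wa & wb) / len(union) if union else 0.0


def estimation_prompt(new_description: str, history: list[PastProject], k: int = 2) -> str:
    """Retrieve the k most similar past projects and fold their estimates into a prompt."""
    similar = sorted(
        history, key=lambda p: jaccard(new_description, p.description), reverse=True
    )[:k]
    examples = "\n".join(f"- {p.description}: {p.estimate_hours} h" for p in similar)
    return (
        f"Similar past projects and their estimates:\n{examples}\n\n"
        f"New project: {new_description}\n"
        "Estimate the effort in hours and explain the main cost drivers."
    )


# Made-up sample data for illustration only.
history = [
    PastProject("iOS shopping app with Stripe payments", 900),
    PastProject("Landing page for a marketing campaign", 60),
    PastProject("Android shopping app with PayPal payments", 950),
]
print(estimation_prompt("Cross-platform shopping app with Stripe payments", history))
```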

The impact

Using RAG, we transformed a labor-intensive task into an automated project estimation system that improves estimate precision and produces significant time and cost savings. Our clients now receive data-driven, contextually relevant estimates so they can make informed decisions quickly.

How to Get Started with RAG

Implementing RAG might sound daunting at first, but here’s a simplified step-by-step guide to help you begin:

1. Select your components

- Retriever: Choose a robust vector search library or database, such as FAISS, Weaviate, or Pinecone.

- Generator: Opt for a state-of-the-art LLM such as GPT-4, or a comparable model available on Hugging Face.

2. Set up your data pipeline

- Data preparation: Organize your custom data and split it into manageable chunks.

- Embeddings: Use a reliable embedding model to convert your text data into embeddings.

- Storage: Store the embeddings in a scalable vector database.
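For the data-preparation step, documents are often split into fixed-size windows that overlap slightly, as in the case study above. That way, context near a chunk boundary appears in both neighboring chunks. The sketch below makes two assumptions: sizes are counted in words, and the 200/40 defaults are placeholders rather than the values NERDZ LAB used.

```python
def chunk_words(text: str, size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into chunks of `size` words; each repeats the last `overlap` words of the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
print(chunk_words(sample, size=4, overlap=1))
```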

3. Develop the retrieval mechanism

- Query processing: Transform incoming queries into embeddings.

- Similarity search: Implement a similarity search algorithm to fetch the most relevant documents.

4. Integrate and deploy

- Fusion: Combine your query with the retrieved content.

- Response generation: Pass the enriched input to your LLM and deploy the system via an API or interactive interface using frameworks like FastAPI or Flask.

5. Optimize and iterate

- Caching: Cache the results of frequent queries so repeated requests skip the full pipeline.

- Tuning: Adjust retrieval thresholds and other settings to optimize performance.

Conclusion: Transform Your AI with RAG

Retrieval augmented generation (RAG) is a transformative approach that bridges the gap between an LLM’s static training data and the dynamic, real-world information your business relies on.

For startup founders, RAG offers an efficient, cost-effective way to enhance AI capabilities without the burden of constantly retraining AI models. It lets you supply up-to-date, context-specific data so that answers draw on precise, relevant information.

You can leverage RAG to turn a generic AI tool into a specialized asset that can answer critical business questions, offer personalized insights, and support informed decision-making. Whether you aim to improve customer support, streamline internal processes, or generate accurate project estimates like we did at NERDZ LAB, RAG is a smart, scalable, and fundamental AI development tool that adapts to your unique needs.

Ready to Take the Next Step?

If you’re excited about the potential of RAG in AI and want to see how it can transform your startup, we invite you to explore NERDZ LAB’s AI development services. Our team of experts is ready to help you integrate cutting-edge AI solutions that propel your business forward. Contact us today to learn more about how RAG can revolutionize your approach to data and decision-making.